Since I took on my new role (Head of QA), colleagues constantly ask me for metrics. That means a lot of work for me, and since I do not always want to compile these numbers by hand, I looked for a simpler way. So the question was: how can I deliver this data at any time, and possibly from different sources (e.g. JIRA, pipelines, test results, Salesforce, etc.)? Hmmm … Grafana is awesome, and not only for DevOps! So in this tutorial series, I'd like to show you how to create nice and meaningful dashboards for your QA metrics in Grafana.

What do you need?

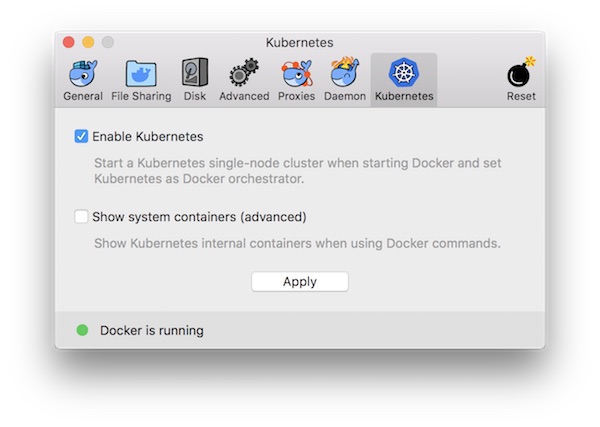

- Docker (latest version), including Docker Compose

- Bash (min. 3.2.x)

- curl (used by the data script and the checks in this tutorial)
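
If you want to verify these prerequisites before you start, a small check script can help. The following is only a minimal sketch: the helper names `version_at_least`, `has_command` and `check_prerequisites` are made up for this example, and the list of commands (docker, docker-compose, curl) is simply what the later steps of this tutorial call.

```shell
#!/usr/bin/env bash
# Sketch: check the tools used in this tutorial (helper names are hypothetical).

# Succeeds if version MAJOR.MINOR (args 1 and 2) is at least the
# required MAJOR.MINOR (args 3 and 4).
version_at_least() {
  local major=$1 minor=$2 req_major=$3 req_minor=$4
  if (( major > req_major )); then
    return 0
  fi
  (( major == req_major && minor >= req_minor ))
}

# Succeeds if the given command is available on the PATH.
has_command() {
  command -v "$1" >/dev/null 2>&1
}

# Print one status line per prerequisite.
check_prerequisites() {
  local cmd
  for cmd in docker docker-compose curl; do
    if has_command "$cmd"; then
      printf 'ok:      %s\n' "$cmd"
    else
      printf 'missing: %s\n' "$cmd"
    fi
  done
  if version_at_least "${BASH_VERSINFO[0]}" "${BASH_VERSINFO[1]}" 3 2; then
    printf 'ok:      bash %s\n' "$BASH_VERSION"
  else
    printf 'too old: bash %s (need 3.2 or newer)\n' "$BASH_VERSION"
  fi
}

check_prerequisites
```

The script only reports what it finds and does not install or change anything.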

Prepare the project

To create dashboards in Grafana, you need a small environment (Grafana and InfluxDB) as well as some data. The next steps help you create both. The services run as Docker containers. For the fictitious data, just use the Bash script which I created for this tutorial.

# create project
$ mkdir -p ~/Projects/GrafanaDemo && cd ~/Projects/GrafanaDemo

# create file docker-compose.yml
$ touch docker-compose.yml

# create file CreateData.sh
$ touch CreateData.sh

# change file permissions
$ chmod u+x CreateData.sh

Now copy and paste the following content into the two files with your favorite editor. First, the content of docker-compose.yml:

version: '3'

networks:
  grafana-demo:
    driver: bridge
    ipam:
      driver: default
      config:
        - subnet: 10.1.0.0/24

services:
  influxdb:
    container_name: influxdb
    networks:
      grafana-demo:
        ipv4_address: 10.1.0.10
    ports:
      - '8086:8086'
    # pinned to 1.x: this tutorial uses the InfluxDB 1.x HTTP API and influx CLI
    image: influxdb:1.8
  grafana:
    container_name: grafana
    networks:
      grafana-demo:
        ipv4_address: 10.1.0.20
    ports:
      - '3000:3000'
    image: grafana/grafana
    environment:
      - 'GF_INSTALL_PLUGINS=grafana-piechart-panel'
    depends_on:
      - influxdb

And here is the content of CreateData.sh:

#!/usr/bin/env bash

# shell options
#set -x
#set -v
set -e
set -u
set -f

# magic variables
declare -r OPTS="htsp"
declare -a TIMESTAMPS
declare -a OPTIONS=(false false false)
declare -r -a DATABASES=(test_db support_db pipeline_db)
declare -r -a TESTER=(Tina Robert)
declare -r -a SUPPORTER=(Jennifer Mary Tom)
declare -r -a STAGES=(S1 S2 S3)
declare -r -a BUILD=(SUCCESS FAILURE ABORTED)
declare -r -i SUCCESS=0
declare -r -i BAD_ARGS=85
declare -r -i NO_ARGS=86

# functions

# print the help text and exit with the given code (default: success)
function usage() {
  local count
  local file_name
  file_name=$(basename "$0")

  printf "Usage: %s [options...]\n" "$file_name"
  for (( count=1; count<${#OPTS}; count++ )); do
    printf "%s\tcreate %s and content\n" "-${OPTS:$count:1}" "${DATABASES[$count - 1]}"
  done

  exit "${1:-$SUCCESS}"
}

function bad_args() {
  printf "Error: Wrong arguments supplied\n"
  # usage exits the script, so the error code has to be passed on
  usage "$BAD_ARGS"
}

function no_args() {
  printf "Error: No options were passed\n"
  usage "$NO_ARGS"
}

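function create_timestamp_array() {
  local counter=1
  local now
  local timestamp

  # one timestamp per day for the last 30 days; plain arithmetic is used
  # instead of "date -v", which only exists in BSD/macOS date
  now=$(date +"%s")
  while [ "$counter" -le 30 ]; do
    timestamp=$((now - counter * 86400))
    TIMESTAMPS+=("$timestamp")
    ((counter++))
  done
}

# write one line-protocol point ($1) to the database ($2)
function curl_post() {
  local url
  url=$(printf 'http://localhost:8086/write?db=%s&precision=s' "$2")

  curl -i -X POST "$url" --data-binary "$1"
}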

# $1 = timestamp in seconds, $2 = database name (same for all create_* functions)
function create_test_results() {
  local passed
  local failed
  local skipped
  local tester
  local str

  for tester in "${TESTER[@]}"; do
    passed=$((RANDOM % 30 + 20))
    failed=$((RANDOM % passed))
    skipped=$((passed - failed))
    str=$(printf 'suite,app=demo,qa=%s passed=%i,failed=%i,skipped=%i %i' "$tester" "$passed" "$failed" "$skipped" "$1")
    echo "$2: $str"
    curl_post "$str" "$2"
  done
}

function create_support_results() {
  local in
  local out
  local supporter
  local str

  for supporter in "${SUPPORTER[@]}"; do
    in=$((RANDOM % 25))
    out=$((RANDOM % 25))
    str=$(printf 'tickets,support=%s in=%i,out=%i %i' "$supporter" "$in" "$out" "$1")
    echo "$2: $str"
    curl_post "$str" "$2"
  done
}

function create_pipeline_results() {
  local status
  local duration
  local stage
  local str

  for stage in "${STAGES[@]}"; do
    status=${BUILD[$RANDOM % ${#BUILD[@]}]}
    # random duration between 3.000 and 16.999 (fraction is zero-padded)
    duration=$((3 + RANDOM % 14)).$(printf '%03d' $((RANDOM % 1000)))
    str=$(printf 'pipeline,stage=%s status="%s",duration=%s %i' "$stage" "$status" "$duration" "$1")
    echo "$2: $str"
    curl_post "$str" "$2"
  done
}

function main() {
  local repeat
  local i
  local item
  repeat=$(printf '=%.0s' {1..80})

  create_timestamp_array

  for ((i = 0; i < ${#OPTIONS[@]}; ++i)); do
    if [[ "${OPTIONS[$i]}" == "true" ]]; then
      printf "Create database: %s\n" "${DATABASES[$i]}"
      printf "%s\n" "$repeat"
      curl -i -X POST http://localhost:8086/query --data-urlencode "q=CREATE DATABASE ${DATABASES[$i]}"

      printf "Generate content of database: %s\n" "${DATABASES[$i]}"
      printf "%s\n" "$repeat"
      for item in "${TIMESTAMPS[@]}"; do
        if [[ "${DATABASES[$i]}" == "${DATABASES[0]}" ]]; then
          create_test_results "$item" "${DATABASES[$i]}"
        fi
        if [[ "${DATABASES[$i]}" == "${DATABASES[1]}" ]]; then
          create_support_results "$item" "${DATABASES[$i]}"
        fi
        if [[ "${DATABASES[$i]}" == "${DATABASES[2]}" ]]; then
          create_pipeline_results "$item" "${DATABASES[$i]}"
        fi
      done
    fi
  done
}

# script arguments
while getopts "$OPTS" OPTION; do
  case "$OPTION" in
    h)
      usage;;
    t)
      OPTIONS[0]="true";;
    s)
      OPTIONS[1]="true";;
    p)
      OPTIONS[2]="true";;
    *)
      bad_args;;
  esac
done

if [ "$OPTIND" -eq 1 ]; then
  no_args
fi

# main function
main

# exit
exit "$SUCCESS"

Start environment and create data

Once the project and the files have been created, you can build and start the environment. For this you use Docker Compose.

# validate docker-compose file (optional)
$ docker-compose config

# run environment
$ docker-compose up -d

# get IP from grafana container (optional)
$ docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' grafana
10.1.0.20

# get IP from influxdb container (optional)
$ docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' influxdb
10.1.0.10

# show created docker network (optional)
$ docker network ls | grep -i 'grafana'

# show docker containers (optional)
$ docker ps -a | grep -iE 'grafana|influxdb'

In the next step, you create the InfluxDB databases (incl. fictitious measurements, series and data) via the Bash script.

# ping influxdb (optional)
$ curl -I http://localhost:8086/ping

# show help (optional)
$ ./CreateData.sh -h

# create databases and contents (execute only 1x)
$ ./CreateData.sh -t -s -p

# show current databases (optional)
$ curl -G 'http://localhost:8086/query?pretty=true' --data-urlencode "q=SHOW DATABASES"

# show test_db series (optional)
$ curl -G 'http://localhost:8086/query?pretty=true' --data-urlencode "db=test_db" --data-urlencode "q=SHOW SERIES"

# show test_db measurements (optional)
$ curl -G 'http://localhost:8086/query?pretty=true' --data-urlencode "db=test_db" --data-urlencode "q=SHOW MEASUREMENTS"
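
The points that CreateData.sh writes use the InfluxDB 1.x line protocol: a measurement name, comma-separated tags, a space, comma-separated fields, another space and a timestamp (here in seconds, because the script writes with precision=s). To make that shape easier to see, here is a minimal sketch that only assembles and prints one such point. The helper name `format_point` and the sample values are made up for this example; they are not part of the script.

```shell
#!/usr/bin/env bash
# Sketch: assemble one line-protocol point in the same shape that
# CreateData.sh posts. format_point is a hypothetical helper.

# Arguments: measurement, tag set, field set, timestamp in seconds.
format_point() {
  local measurement=$1 tags=$2 fields=$3 timestamp=$4
  printf '%s,%s %s %d' "$measurement" "$tags" "$fields" "$timestamp"
}

point=$(format_point suite 'app=demo,qa=Tina' 'passed=25,failed=3,skipped=22' 1700000000)
echo "$point"

# To write the point (requires the running InfluxDB container):
# curl -i -X POST 'http://localhost:8086/write?db=test_db&precision=s' --data-binary "$point"
```

This prints `suite,app=demo,qa=Tina passed=25,failed=3,skipped=22 1700000000`. Tags (app, qa) are what you will later group and filter by in Grafana, while fields (passed, failed, skipped) carry the values. String field values need double quotes, which is why the script writes the pipeline status as status="SUCCESS".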

Note: You can also use the influx command-line client to administer InfluxDB directly.

# exec docker container
$ docker exec -ti influxdb influx

# list all databases
> show databases

# use specific database test_db
> use test_db

# show series of test_db
> show series

# show measurements of test_db
> show measurements

# drop measurement suite of test_db (in case something went wrong)
> drop measurement suite

Okay, the environment preparation is done. Now open Grafana in the browser.

# open Grafana in browser (macOS; on Linux use xdg-open instead of open)
$ open http://localhost:3000
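
Right after docker-compose up -d, Grafana may need a moment before it answers, for example while the plugin from GF_INSTALL_PLUGINS is being installed. If the page does not load at first, you can poll until the service responds. The following is just a sketch: `wait_for_url` is a hypothetical helper, and the attempt count and delay are arbitrary defaults. It relies on Grafana's /api/health endpoint; the same function also works with InfluxDB's /ping URL.

```shell
#!/usr/bin/env bash
# Sketch: poll a URL until it responds. wait_for_url is a hypothetical helper.

# Arguments: URL, maximum number of attempts (default 30),
# delay between attempts in seconds (default 1).
# Returns 0 as soon as curl gets a successful HTTP response,
# and 1 if every attempt fails.
wait_for_url() {
  local url=$1 attempts=${2:-30} delay=${3:-1}
  local try
  for (( try = 1; try <= attempts; try++ )); do
    if curl --silent --fail --output /dev/null "$url"; then
      return 0
    fi
    sleep "$delay"
  done
  return 1
}

# Usage (assumes the containers from this tutorial are running):
# wait_for_url http://localhost:3000/api/health && open http://localhost:3000
```

The usage line is commented out so that the snippet can be sourced without touching the network.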

The default username and password are both “admin”. Note: if you run docker-compose down, the containers are removed and you have to repeat most of the steps, such as the data creation. Better use docker-compose stop! … See you in the 2nd part, where we add data sources and create dashboards.