This tutorial covers some simple PHP QA tools and Jenkins in Docker. I will show how to install PHP and Composer in a Jenkins Alpine Linux Docker image, including some required Jenkins plugins.

Note

If you already have a running Docker container that you cannot stop, you can install the needed packages directly:

# list containers (optional)
$ docker ps -a

# access running container as root
$ docker exec -u 0 -it <Container Name> sh

# install packages and exit container
...

Now you can run the same commands that follow each comment in the Dockerfile below. Otherwise, follow the next steps.

Let’s go

# create new project
$ mkdir -p ~/Projects/DockerJenkins && cd ~/Projects/DockerJenkins/

# create Dockerfile and plugins.txt
$ touch Dockerfile plugins.txt

# modify Dockerfile
$ vim Dockerfile

# modify plugins.txt
$ vim plugins.txt

FROM jenkins/jenkins:lts-alpine

USER root

RUN apk update && apk upgrade

# install needed library packages

RUN apk --no-cache add libssh2 libpng freetype libjpeg-turbo libgcc \
    libxml2 libstdc++ icu-libs libltdl libmcrypt

# install needed PHP packages

RUN apk --no-cache add php7 php7-fpm php7-opcache php7-gd php7-pdo_mysql \
    php7-mysqli php7-mysqlnd php7-zlib php7-curl php7-phar \
    php7-iconv php7-pear php7-xml php7-pdo php7-ctype php7-mbstring \
    php7-soap php7-intl php7-bcmath php7-dom php7-xmlreader php7-openssl \
    php7-tokenizer php7-simplexml php7-json

# Download and install composer installer

RUN php -r "copy('https://getcomposer.org/installer', 'composer-setup.php');"

RUN php -r "if (hash_file('SHA384', 'composer-setup.php') === '544e09ee996cdf60ece3804abc52599c22b1f40f4323403c44d44fdfdd586475ca9813a858088ffbc1f233e9b180f061') { echo 'Installer verified'; } else { echo 'Installer corrupt'; unlink('composer-setup.php'); } echo PHP_EOL;"

RUN php composer-setup.php

RUN mv composer.phar /usr/local/bin/composer

RUN chmod +x /usr/local/bin/composer

RUN rm -f composer-setup.php

USER jenkins

# install plugins from plugins.txt

COPY plugins.txt /usr/share/jenkins/ref/plugins.txt

RUN /usr/local/bin/install-plugins.sh < /usr/share/jenkins/ref/plugins.txt
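A side note on the installer check in the Dockerfile: the `php -r` line only prints a message and deletes the installer on a mismatch. The `RUN` step itself still succeeds, and the build stops one step later when `php composer-setup.php` cannot find the file. If you prefer the check itself to fail, the idea can be sketched in plain shell as below. This is only a sketch: the function name `verify_sha384` is made up for illustration, and it assumes a `sha384sum` binary (GNU coreutils) is available, which may not be the case in the Alpine base image.

```shell
#!/bin/sh
# verify_sha384 FILE EXPECTED_HEX (hypothetical helper)
# Succeeds only if the SHA-384 digest of FILE equals EXPECTED_HEX.
# On a mismatch the file is removed and a non-zero status is returned.
verify_sha384() {
  file=$1
  expected=$2
  # sha384sum prints "<digest>  <file>"; keep only the digest
  actual=$(sha384sum "$file" | cut -d ' ' -f 1)
  if [ "$actual" = "$expected" ]; then
    echo "Installer verified"
  else
    echo "Installer corrupt" >&2
    rm -f "$file"
    return 1
  fi
}

# Demonstration on a throwaway file, not on the real Composer installer
demo=$(mktemp)
printf 'hello\n' > "$demo"
good=$(sha384sum "$demo" | cut -d ' ' -f 1)
verify_sha384 "$demo" "$good"
```

With a helper like this, a wrong hash ends the step with a non-zero exit status, so the error shows up exactly where the problem is.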

The plugins.txt lists one plugin per line in the form name:version:

checkstyle:3.50
analysis-core:1.95
dry:2.50
pmd:3.50
violations:0.7.11
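Because a typo in plugins.txt only shows up late in the image build, a quick format check beforehand can save some time. The following is a minimal sketch, assuming every entry is pinned as `name:version` like the five plugins above. The function name `check_plugins` is made up for this example, and the demo writes to a temporary file rather than to your real plugins.txt.

```shell
#!/bin/sh
# check_plugins FILE (hypothetical helper)
# Every non-empty line must look like name:version.
check_plugins() {
  # First grep keeps lines that do NOT match the pattern,
  # second grep drops empty lines; "|| true" avoids a failing
  # status when nothing is left (which is the good case).
  bad=$(grep -v -E '^[A-Za-z0-9._-]+:[A-Za-z0-9._-]+$' "$1" | grep -v '^$' || true)
  if [ -n "$bad" ]; then
    echo "malformed lines in $1:" >&2
    echo "$bad" >&2
    return 1
  fi
}

# Demonstration with the plugin list from this tutorial
list=$(mktemp)
printf '%s\n' \
  'checkstyle:3.50' \
  'analysis-core:1.95' \
  'dry:2.50' \
  'pmd:3.50' \
  'violations:0.7.11' > "$list"
check_plugins "$list" && echo "plugins list looks fine"
```

Running `check_plugins plugins.txt` in the project directory before `docker build` would be the typical use.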

That's it! Now build the image, start the container, and work with Jenkins.

# build image from Dockerfile
$ docker build -t lupin/jenkins:lts-alpine .

# list images (optional)
$ docker images

# start container
$ docker run --name JenkinsPHP -p 8080:8080 lupin/jenkins:lts-alpine
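Jenkins needs a moment after `docker run` before the web interface answers. If you want to script the waiting, a small retry helper is one way to do it. This is a sketch only: the `retry` function is made up for this example, and the URL in the usage comment assumes that port 8080 is published as above and that the login page answers successfully once Jenkins is up.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY COMMAND... (hypothetical helper)
# Reruns COMMAND until it succeeds or ATTEMPTS is used up,
# sleeping DELAY seconds after each failed try.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    n=$((n + 1))
    sleep "$delay"
  done
  return 1
}

# Assumed usage against the container started above:
#   retry 60 2 curl -fsS -o /dev/null http://localhost:8080/login
```

Once the page answers, the setup wizard of the official image normally asks for the initial admin password, which can usually be read with `docker exec JenkinsPHP cat /var/jenkins_home/secrets/initialAdminPassword`.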

Test

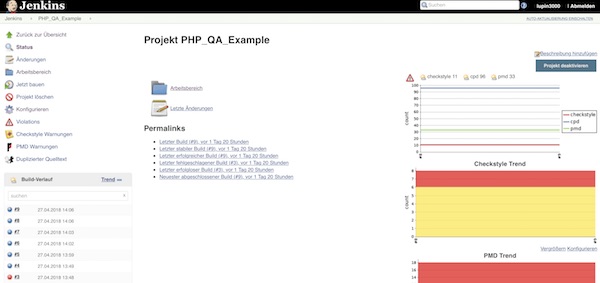

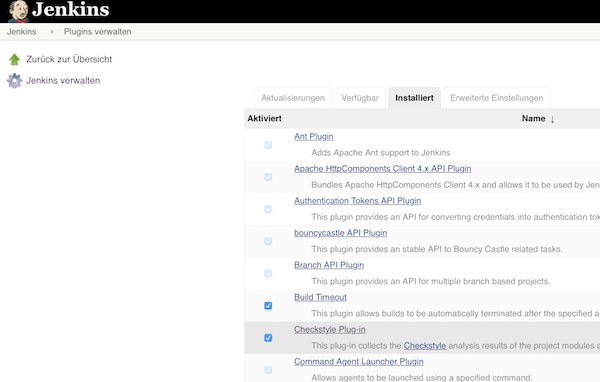

After starting, configuring, and logging in, you can see the already installed plugins in the Jenkins plugin manager.

To test, you can create a simple freestyle job. There you configure the repository, the build steps, and the post-build actions. After a few runs, the results should be visible on the project page.
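As an illustration of such a build step, here is a sketch of an "Execute shell" script that writes reports the installed plugins can pick up in the post-build actions. It rests on several assumptions that are not part of this tutorial: the tools `phpcs`, `phpmd` and `phpcpd` are installed (for example via Composer) and on the `PATH`, the sources live in `src`, and the reports go to `build/logs`. The helper `run_if_present` is made up for this example and simply skips tools that are missing.

```shell
#!/bin/sh
# Hypothetical build step for a freestyle job.
# SRC_DIR and LOG_DIR are assumptions; adjust them to your project.
SRC_DIR="${SRC_DIR:-src}"
LOG_DIR="${LOG_DIR:-build/logs}"
mkdir -p "$LOG_DIR"

# Run a tool only if it is installed. QA tools exit non-zero when
# they report findings, so that status is logged instead of failing
# the build step; the Jenkins plugins evaluate the reports afterwards.
run_if_present() {
  tool=$1
  if command -v "$tool" >/dev/null 2>&1; then
    "$@" || echo "$tool exited with status $?"
  else
    echo "skipping $tool: not installed"
  fi
}

# Coding standard report in Checkstyle format (Checkstyle plugin)
run_if_present phpcs --report=checkstyle --report-file="$LOG_DIR/checkstyle.xml" "$SRC_DIR"

# Mess detector report in PMD XML format (PMD plugin)
run_if_present phpmd "$SRC_DIR" xml codesize,unusedcode --reportfile "$LOG_DIR/pmd.xml"

# Duplicate code report in PMD-CPD format (DRY plugin)
run_if_present phpcpd --log-pmd "$LOG_DIR/pmd-cpd.xml" "$SRC_DIR"
```

In the post-build actions you would then point each plugin at its report file, for example `build/logs/checkstyle.xml` for Checkstyle.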