I was a little surprised that there is no Vagrant plug-in for Vault. Then I thought it doesn't matter, because the Vagrantfile is actually a Ruby script, so let me just try it. I have to say right away that I'm not a Ruby developer! But here is my solution, which got me to the goal.

Prerequisite

Prepare project and start Vault

# create new project
$ mkdir -p ~/Projects/vagrant-vault && cd ~/Projects/vagrant-vault

# create 2 empty files
$ touch vagrant.hcl Vagrantfile

# start Vault in development mode
$ vault server -dev

Here is my simple vagrant policy, which goes into vagrant.hcl (don't do that in production).

path "secret/*" {
  capabilities = ["read", "list"]
}

And here is my crazy and fancy Vagrantfile:

# -*- mode: ruby -*-
# vi: set ft=ruby :

require 'net/http'
require 'uri'
require 'json'
require 'ostruct'

################ YOUR SETTINGS ####################
ROLE_ID = '99252343-090b-7fb0-aa26-f8db3f5d4f4d'
SECRET_ID = 'b212fb14-b7a4-34d3-2ce0-76fe85369434'
URL = 'http://127.0.0.1:8200/v1/'
# KV version 2 read path: <mount>/data/<path> (here: secret/vagrant/test)
SECRET_PATH = 'secret/data/vagrant/test'
###################################################

# Log in via AppRole; returns the client token, or '' on failure
def getToken(url, role_id, secret_id)
  uri = URI.parse(url + 'auth/approle/login')
  request = Net::HTTP::Post.new(uri)
  request.content_type = 'application/json'
  request.body = JSON.dump({
    "role_id" => role_id,
    "secret_id" => secret_id
  })
  req_options = {
    use_ssl: uri.scheme == "https",
  }
  response = Net::HTTP.start(uri.hostname, uri.port, req_options) do |http|
    http.request(request)
  end
  if response.code == "200"
    result = JSON.parse(response.body, object_class: OpenStruct)
    token = result.auth.client_token
    return token
  else
    return ''
  end
end

# Read the secret with the token; returns the parsed response, or '' on failure
def getSecret(url, secret_url, token)
  uri = URI.parse(url + secret_url)
  request = Net::HTTP::Get.new(uri)
  request["X-Vault-Token"] = token
  req_options = {
    use_ssl: uri.scheme == "https",
  }
  response = Net::HTTP.start(uri.hostname, uri.port, req_options) do |http|
    http.request(request)
  end
  if response.code == "200"
    result = JSON.parse(response.body, object_class: OpenStruct)
    return result
  else
    return ''
  end
end

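token = getToken(URL, ROLE_ID, SECRET_ID)
if token.to_s.strip.empty?
  puts 'Error - login failed, please check your settings'
  exit(1)
end

# Stop here too if the secret cannot be read; otherwise sec_a/sec_b
# would be undefined when the post_up_message is built
result = getSecret(URL, SECRET_PATH, token)
if result.to_s.strip.empty?
  puts 'Error - could not read the secret, please check SECRET_PATH and the policy'
  exit(1)
end

sec_a = result.data.data.secret_a
sec_b = result.data.data.secret_b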

Vagrant.configure("2") do |config|

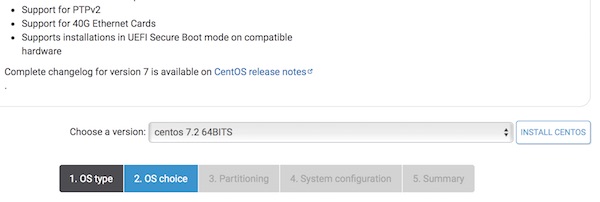

  config.vm.box = "centos/7"
  # message shown once `vagrant up` has finished
  config.vm.post_up_message = "Secret A: #{sec_a} - Secret B: #{sec_b}"
end
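Why `result.data.data.secret_a` has two `data` levels: the KV version 2 engine (which `vault server -dev` mounts at `secret/`) returns the key/value pairs in a `data` object nested inside the response's top-level `data`, next to `metadata`. A minimal sketch against a hand-written, trimmed sample body (not captured output; real responses carry more fields), with a hypothetical helper `kv2_values` that does the same lookup on plain hashes:

```ruby
require 'json'

# Hand-written, trimmed example of a KV v2 read response for
# GET /v1/secret/data/vagrant/test (real responses contain more fields).
SAMPLE_BODY = <<~JSON
  {
    "data": {
      "data": { "secret_a": "foo", "secret_b": "bar" },
      "metadata": { "version": 1 }
    }
  }
JSON

# Hypothetical helper: return the inner key/value hash of a KV v2 response,
# or an empty hash when the expected nesting is missing.
def kv2_values(body)
  JSON.parse(body).dig('data', 'data') || {}
end

values = kv2_values(SAMPLE_BODY)
puts values.fetch('secret_a') # prints foo
puts values.fetch('secret_b') # prints bar
```

With a plain `Hash`, `dig` returns `nil` for a missing level, whereas chaining through `OpenStruct` raises `NoMethodError` as soon as an intermediate level is `nil`.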

Configure Vault

# set environment variables (new terminal, the dev server keeps running in the first one)
$ export VAULT_ADDR='http://127.0.0.1:8200'

# check status (optional)
$ vault status

# create simple kv secret
$ vault kv put secret/vagrant/test secret_a=foo secret_b=bar

# show created secret (optional)
$ vault kv get --format yaml -field=data secret/vagrant/test

# create/import vagrant policy
$ vault policy write vagrant vagrant.hcl

# show created policy (optional)
$ vault policy read vagrant

# enable AppRole auth method
$ vault auth enable approle

# create new role
$ vault write auth/approle/role/vagrant token_num_uses=1 token_ttl=10m token_max_ttl=20m policies=vagrant

# show created role (optional)
$ vault read auth/approle/role/vagrant

# show role_id (this value goes into ROLE_ID in the Vagrantfile)
$ vault read auth/approle/role/vagrant/role-id
...
99252343-090b-7fb0-aa26-f8db3f5d4f4d
...

# create and show secret_id (this value goes into SECRET_ID in the Vagrantfile)
$ vault write -f auth/approle/role/vagrant/secret-id
...
b212fb14-b7a4-34d3-2ce0-76fe85369434
...
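The role_id and secret_id printed above end up hard-coded in the Vagrantfile. As a variation that is not part of the setup above, they could be read from the environment instead. A minimal sketch, assuming the hypothetical variable names `VAULT_ROLE_ID` and `VAULT_SECRET_ID` (chosen for this example, not defined by Vault or Vagrant); `VAULT_ADDR` is the variable already exported above:

```ruby
# Hypothetical helpers for the Vagrantfile: take the AppRole credentials and
# the Vault address from the environment instead of hard-coding them.
# VAULT_ROLE_ID / VAULT_SECRET_ID are names chosen for this sketch.

def approle_credentials(env = ENV)
  role_id = env['VAULT_ROLE_ID'].to_s.strip
  secret_id = env['VAULT_SECRET_ID'].to_s.strip
  if role_id.empty? || secret_id.empty?
    raise ArgumentError, 'please export VAULT_ROLE_ID and VAULT_SECRET_ID'
  end
  [role_id, secret_id]
end

# Build the API base URL (with trailing /v1/) from VAULT_ADDR,
# falling back to the dev server address used in this post.
def vault_api_url(env = ENV)
  env.fetch('VAULT_ADDR', 'http://127.0.0.1:8200').chomp('/') + '/v1/'
end

# Usage inside the Vagrantfile (instead of the constants under YOUR SETTINGS):
#   ROLE_ID, SECRET_ID = approle_credentials
#   URL = vault_api_url
```

The values could then be exported before `vagrant up`, for example:
$ export VAULT_ROLE_ID=$(vault read -field=role_id auth/approle/role/vagrant/role-id)
$ export VAULT_SECRET_ID=$(vault write -f -field=secret_id auth/approle/role/vagrant/secret-id)
Because Vagrant loads the Vagrantfile for every command, the variables then have to be set for `vagrant halt` or `vagrant destroy` as well.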

Run it

# start and provision the Vagrant environment
$ vagrant up

😉 … it just works