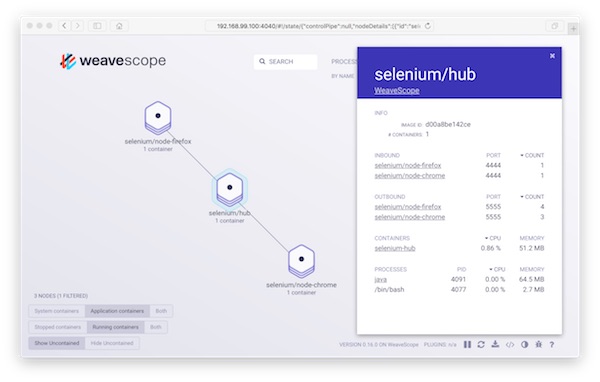

With Weave Scope you get, within seconds, attractive monitoring, visualisation and management for Docker and Kubernetes in your browser. Below I show a simple example using Docker-Selenium.

Preconditions

- docker-machine and VirtualBox installed (the VM below is created with the virtualbox driver)
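
A quick way to check the preconditions before starting could look like the following sketch. The `have_tool` helper is a hypothetical name introduced here for illustration. `VBoxManage` is checked on the assumption that VirtualBox is the driver in use, as in the `docker-machine create -d virtualbox` step further down.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the given command is available on PATH.
have_tool() {
    command -v "$1" >/dev/null 2>&1
}

# docker-machine is the stated precondition; VBoxManage is the VirtualBox CLI,
# assumed to be needed because the VM is created with "-d virtualbox".
for tool in docker-machine VBoxManage; do
    if have_tool "$tool"; then
        echo "found:   $tool"
    else
        echo "missing: $tool"
    fi
done
```

If a tool is reported as missing, install it before continuing.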

Let's go…

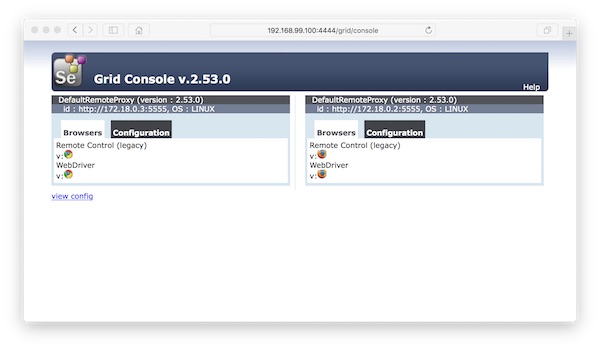

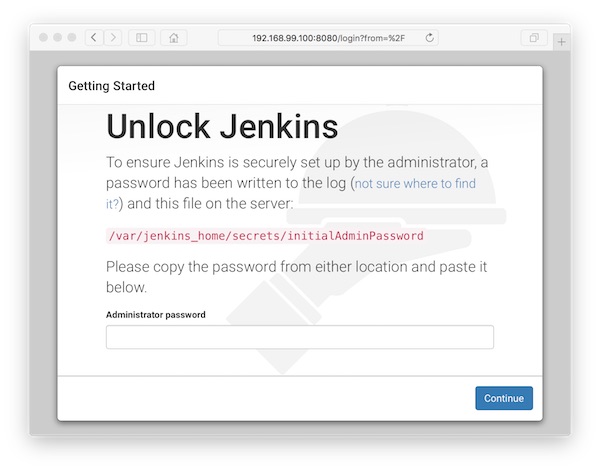

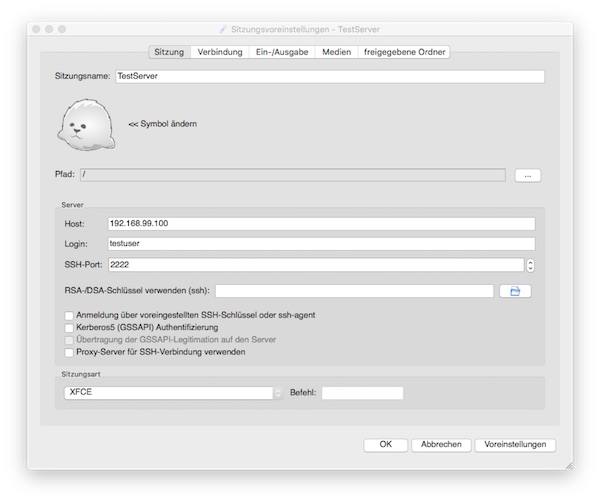

    # create new Docker VM (local)
    $ docker-machine create -d virtualbox WeaveScope

    # point the shell to the WeaveScope VM (local)
    $ eval $(docker-machine env WeaveScope)

    # SSH into the WeaveScope VM (local -> VM)
    $ docker-machine ssh WeaveScope

    # become root (VM)
    $ sudo su -

    # download scope (VM)
    $ wget -O /usr/local/bin/scope https://git.io/scope

    # make scope executable (VM)
    $ chmod a+x /usr/local/bin/scope

    # launch scope (VM)
    # Do not forget the shown URL!!!
    $ scope launch

    # exit root, then exit ssh (VM -> local)
    $ exit
    $ exit

    # create Selenium Hub (local)
    $ docker run -d -p 4444:4444 --name selenium-hub selenium/hub:2.53.0

    # create Selenium Chrome Node (local)
    $ docker run -d --link selenium-hub:hub selenium/node-chrome:2.53.0

    # create Selenium Firefox Node (local)
    $ docker run -d --link selenium-hub:hub selenium/node-firefox:2.53.0

    # show running containers (optional)
    $ docker ps -a
    ...
    CONTAINER ID  IMAGE                         COMMAND                 CREATED         STATUS         PORTS                   NAMES
    a3e7b11c5a5f  selenium/node-firefox:2.53.0  "/opt/bin/entry_point"  9 seconds ago   Up 8 seconds                           cocky_wing
    699bf05681e8  selenium/node-chrome:2.53.0   "/opt/bin/entry_point"  42 seconds ago  Up 42 seconds                          distracted_darwin
    bbc5f545261b  selenium/hub:2.53.0           "/opt/bin/entry_point"  2 minutes ago   Up 2 minutes   0.0.0.0:4444->4444/tcp  selenium-hub
    9fe4e406fb50  weaveworks/scope:0.16.0       "/home/weave/entrypoi"  5 minutes ago   Up 5 minutes                           weavescope

That’s it! Now start your browser and open the URL that `scope launch` printed.
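
If the URL has scrolled out of view, it can usually be rebuilt from the VM's IP address. The sketch below assumes that Scope serves its UI on its default port 4040 and that the VM is named `WeaveScope` as above. `scope_url` is a hypothetical helper introduced here, not part of Scope or docker-machine.

```shell
#!/bin/sh
# Hypothetical helper: build the Scope UI URL from a host IP.
# Port 4040 is assumed to be Scope's default; pass another port as $2 if needed.
scope_url() {
    echo "http://$1:${2:-4040}"
}

# Only ask docker-machine for the IP when it is installed and the VM exists.
if command -v docker-machine >/dev/null 2>&1; then
    if vm_ip=$(docker-machine ip WeaveScope 2>/dev/null); then
        scope_url "$vm_ip"
    fi
fi
```

The Selenium hub published with `-p 4444:4444` should be reachable on the same IP at port 4444.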