This tutorial series should enable you to create your own pipelines with Jenkins on AWS. To get there, we try to cover all the necessary basics of AWS IAM, EC2, ECR and ECS. Some of our configurations are recommended for learning purposes only; don’t use them in production! Why? Because these lessons are for people who are new to these topics, and I will try to keep all steps and configurations as simple as possible, without focusing on security. In this part we will create the environment and set up the “build step”.

Prerequisites

- AWS account (e.g. free tier)

- Git account (e.g. GitLab, Bitbucket, GitHub)

AWS IAM

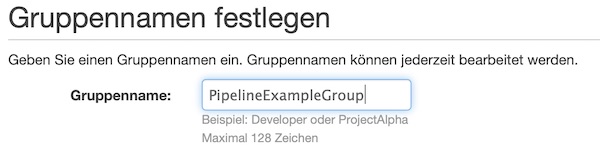

The first preparation takes place in the AWS IAM Management Console. Here you create and configure a new group. The benefit of a group is that you can easily change the policies for all assigned users at any time. Please name the group “PipelineExampleGroup”.

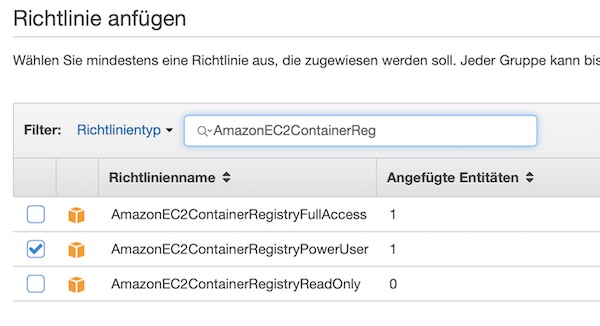

Now search for the EC2 Container Registry policies and select the checkbox for “AmazonEC2ContainerRegistryPowerUser”. This policy is sufficient for our example, but don’t do this in production!

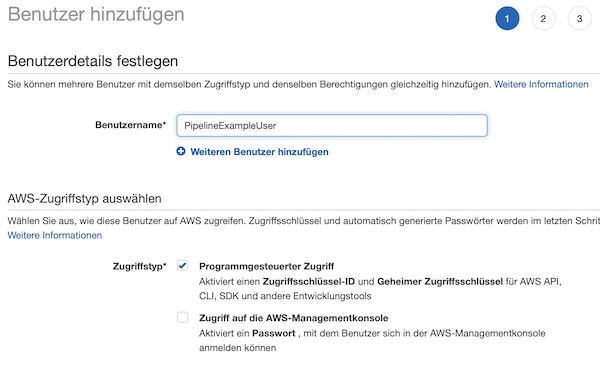

After the group is created, a user needs to be assigned to it. Name the user “PipelineExampleUser” and select the checkbox “Programmatic access” for this user.

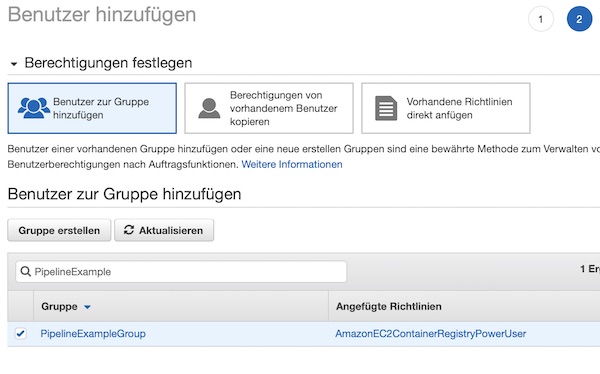

Assign the user to the group.

Before you finish the process, choose “Download .csv” and save the file to a safe location. It contains the user’s access key ID and secret access key, which you will need later.
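If you prefer the command line, the same IAM setup can be scripted with the AWS CLI. The following is a minimal sketch, not part of the original steps: it assumes the AWS CLI is installed and configured with credentials that are allowed to manage IAM (not the new PipelineExampleUser, which only gets ECR rights). The run wrapper is a hypothetical helper that only prints each command unless DRY_RUN=0 is set.

```shell
#!/usr/bin/env bash
# Sketch: the IAM console steps as AWS CLI calls.
# Assumption: AWS CLI credentials that may manage IAM.
# DRY_RUN=1 (default) only prints the commands, DRY_RUN=0 executes them.
set -euo pipefail

GROUP="PipelineExampleGroup"
USER_NAME="PipelineExampleUser"
POLICY_ARN="arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryPowerUser"

# hypothetical helper: print the command in dry-run mode, execute it otherwise
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# group with the ECR power-user policy attached
run aws iam create-group --group-name "$GROUP"
run aws iam attach-group-policy --group-name "$GROUP" --policy-arn "$POLICY_ARN"

# user for programmatic access, added to the group
run aws iam create-user --user-name "$USER_NAME"
run aws iam add-user-to-group --group-name "$GROUP" --user-name "$USER_NAME"

# access key pair (CLI counterpart of "Download .csv"); the secret is shown only once
run aws iam create-access-key --user-name "$USER_NAME"
```

With DRY_RUN=0 the last call prints the new access key ID and secret access key, so store that output as carefully as the CSV file.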

AWS Jenkins EC2 Instance

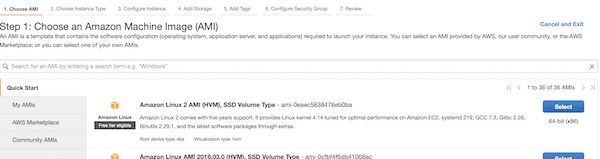

Now you can launch the EC2 instance. I use the region “Frankfurt” (eu-central-1). You can of course choose any other region, but remember your choice for later. In the very first step, select the AMI “Amazon Linux 2 AMI (HVM), SSD Volume Type”.

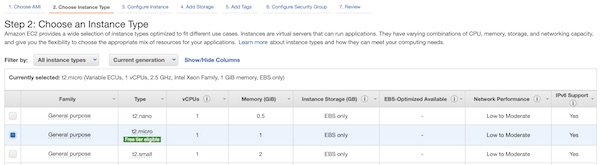

The instance type “t2.micro” is enough for our example. For production you will need something else, depending on your needs.

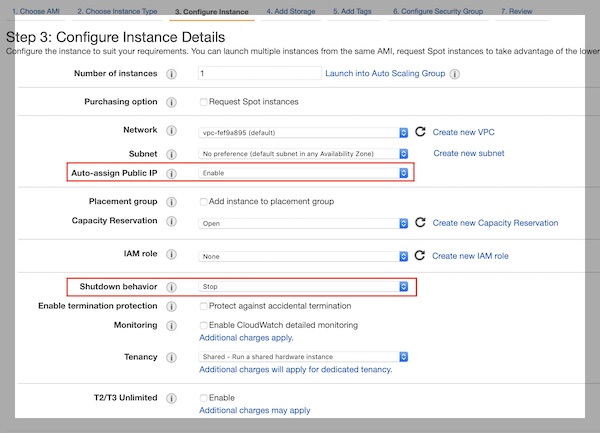

Now you need to be a little careful. In the “Instance Details” step, select “Enable” for “Auto-assign Public IP” and “Stop” for “Shutdown behavior”. The defaults should be fine for all other values. I select my default VPC and “No preference…” for Subnet.

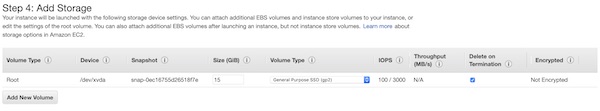

15 GB of disk space is enough. For production you will need to size the volume according to your workload.

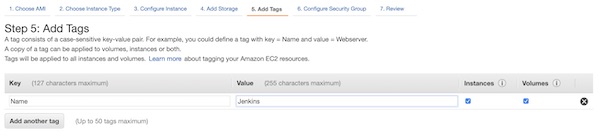

A tag makes it easier to identify the instance later in the console view. Enter “Name” as “Key” and “Jenkins” as “Value”.

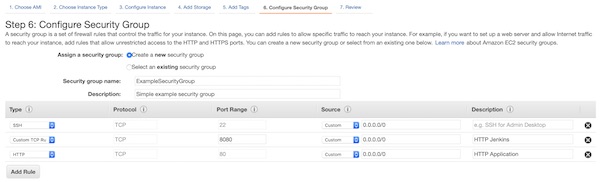

Create a new security group named “ExampleSecurityGroup” and allow inbound traffic on ports 22 (SSH), 80 (HTTP) and 8080 (Jenkins), IPv4 only. You can change this configuration at any time. In a production environment you should use other ports, such as 443 (HTTPS), and restrict the allowed source IPs.

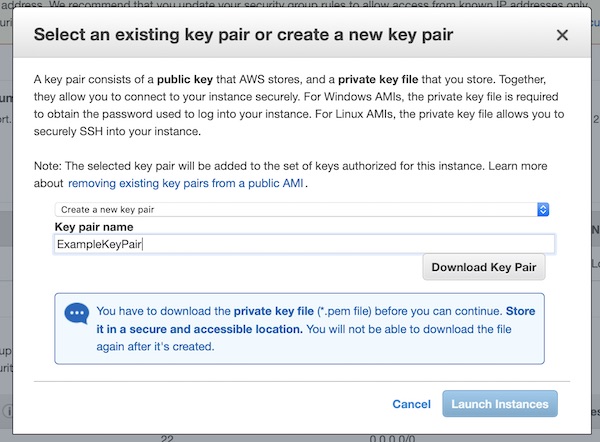

Create a new key pair named “ExampleKeyPair”. Don’t forget to save the key (“Download Key Pair”) before you press “Launch Instances”!
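The security group can also be prepared from the command line. The sketch below uses a hypothetical helper, sg_commands, that only prints the AWS CLI calls so you can review them before piping them into bash. It assumes the default VPC (where --group-name is accepted) and opens the ports to any IPv4 address (0.0.0.0/0), which fits this learning setup but not a production one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the AWS CLI calls for a security group that opens
# the given TCP ports to any IPv4 address (0.0.0.0/0, learning setup only).
# Assumption: default VPC, so --group-name is accepted; in a non-default VPC
# you would need --vpc-id on creation and --group-id for the ingress rules.
sg_commands() {
  local group="$1" port
  shift
  printf 'aws ec2 create-security-group --group-name %s --description "Jenkins example"\n' "$group"
  for port in "$@"; do
    printf 'aws ec2 authorize-security-group-ingress --group-name %s --protocol tcp --port %s --cidr 0.0.0.0/0\n' \
      "$group" "$port"
  done
}

# review the output first, then append "| bash" to execute it
sg_commands ExampleSecurityGroup 22 80 8080

# the key pair can be created similarly; keep the private key safe:
#   aws ec2 create-key-pair --key-name ExampleKeyPair \
#     --query KeyMaterial --output text > ~/.ssh/ExampleKeyPair.pem
```

Printing instead of executing keeps the helper side-effect free, and the loop makes it easy to add or drop ports later.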

Install and run Jenkins

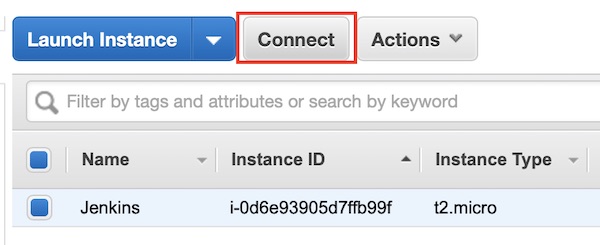

The EC2 instance is now running and you can connect via SSH to do all the necessary installations and configurations. Attention: your public IP/DNS will be different from mine and also changes after every stop/start; the “Connect” button shows your current values. In my description I will just use the placeholder “<EC2 IP|DNS>”.
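Since the public DNS changes after every stop/start, you may want to look it up from your local terminal instead of the console. This is a sketch under two assumptions: a locally configured AWS CLI whose credentials may call ec2:DescribeInstances (the PipelineExampleUser cannot), and the “Name”/“Jenkins” tag set during launch. The helper name jenkins_host and the AWS_CLI override (only there to plug in a stub for testing) are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the public DNS name of the running instance
# tagged Name=Jenkins. AWS_CLI defaults to "aws" and can point to a stub.
jenkins_host() {
  "${AWS_CLI:-aws}" ec2 describe-instances \
    --region "${REGION:-eu-central-1}" \
    --filters "Name=tag:Name,Values=Jenkins" "Name=instance-state-name,Values=running" \
    --query "Reservations[].Instances[].PublicDnsName" \
    --output text
}

# usage:
#   ssh -i ~/.ssh/ExampleKeyPair.pem ec2-user@"$(jenkins_host)"
```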

```shell
# move SSH key (mine was downloaded to ~/Downloads)
$ mv ~/Downloads/ExampleKeyPair.pem.txt ~/.ssh/ExampleKeyPair.pem

# restrict permissions (ssh rejects private keys that others can read)
$ chmod 0400 ~/.ssh/ExampleKeyPair.pem

# start SSH connection
$ ssh -i ~/.ssh/ExampleKeyPair.pem ec2-user@<EC2 IP|DNS>

# switch to root user
$ sudo su -

# update system
$ yum update -y

# add latest Jenkins repository
$ wget -O /etc/yum.repos.d/jenkins.repo https://pkg.jenkins.io/redhat/jenkins.repo

# import Jenkins signing key
$ rpm --import https://pkg.jenkins.io/redhat/jenkins.io.key

# install Docker
$ amazon-linux-extras install -y docker

# install Java, Git, Jenkins and jq
$ yum install -y java git jenkins jq

# add jenkins user to docker group (before Jenkins starts, so the group applies)
$ usermod -a -G docker jenkins

# enable and start Docker
$ systemctl enable docker && systemctl start docker

# enable and start Jenkins
$ systemctl enable jenkins && systemctl start jenkins

# get initial admin password
$ cat /var/lib/jenkins/secrets/initialAdminPassword
```
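Before moving on, you can check that the usermod call took effect; without docker group membership, the jenkins user gets a permission error on the Docker socket in the build step. The helper user_in_group is a hypothetical name and only reads the output of id.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given user is a member of the given group.
user_in_group() {
  local user="$1" group="$2"
  # id -nG lists all group names of the user, separated by spaces
  id -nG "$user" | tr ' ' '\n' | grep -qxF "$group"
}

# usage on the EC2 instance:
#   user_in_group jenkins docker && echo "jenkins may use docker"
# Group changes only apply to processes started afterwards; if Jenkins was
# already running during usermod, restart it: systemctl restart jenkins
```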

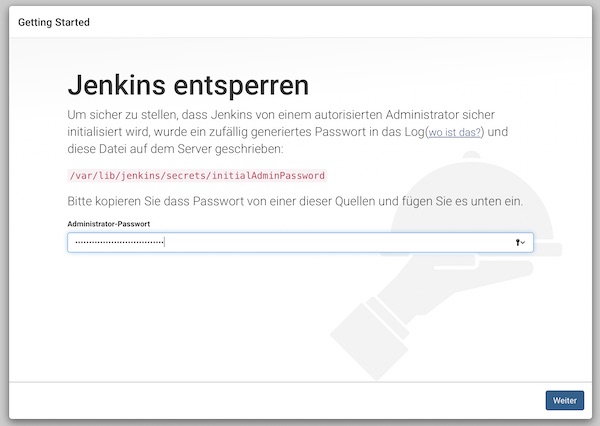

Do not close the SSH connection yet. Open your browser and follow the Jenkins installation steps there. The URL uses the same host as your SSH connection: http://<EC2 IP|DNS>:8080. You should see the unlock screen shown above; paste the initial password there.

On the next screen, press “Install suggested plugins” and wait for the screen to create the first admin user. Fill in your credentials and finish the installation steps. The remaining configuration in the browser will be done later.

AWS ECR

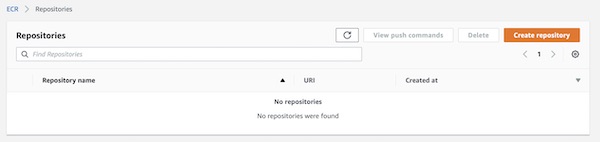

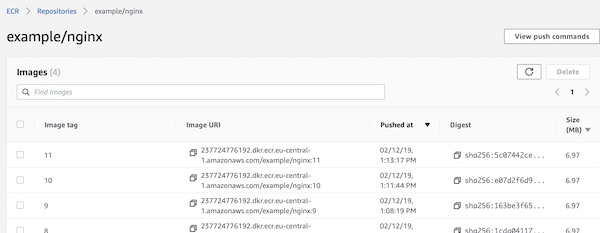

Before you can push images to ECR, you need to create a new repository. On the ECR page, click the button “Create repository”. Your AWS ECR console may look a little different.

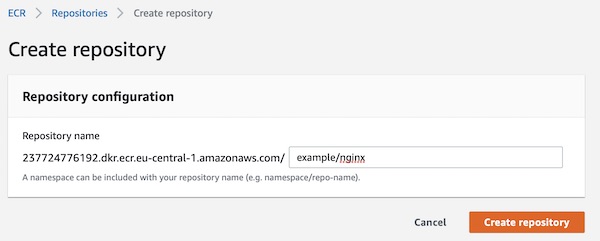

Enter the repository name “example/nginx” and press “Create repository”.

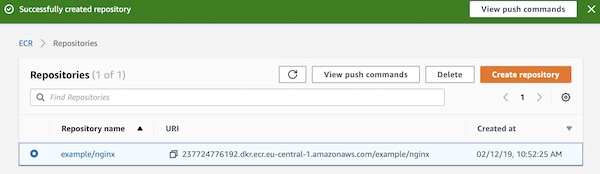

Done, your ECR repository has been created. The overview page shows all the information you need, such as the repository name and URI. Your repository URI will differ from mine; in my description I will just use the placeholder “<ECR URI>”.
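An ECR repository URI follows the pattern <account-id>.dkr.ecr.<region>.amazonaws.com/<repository>. Splitting it is handy because docker login expects only the registry host, and the region inside the URI must match the region you configure for the AWS CLI. The following is a small sketch with a hypothetical helper, parse_ecr_uri, fed with the URI from the Jenkinsfile below.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split an ECR repository URI of the form
#   <account-id>.dkr.ecr.<region>.amazonaws.com/<repository>
# into its parts using only bash parameter expansion.
parse_ecr_uri() {
  local uri="$1" host repo account region
  host="${uri%%/*}"              # everything before the first "/"
  repo="${uri#*/}"               # everything after the first "/"
  account="${host%%.*}"          # first label of the host
  region="${host#*.dkr.ecr.}"    # "<region>.amazonaws.com"
  region="${region%%.*}"
  printf 'registry=%s\naccount=%s\nregion=%s\nrepository=%s\n' \
    "$host" "$account" "$region" "$repo"
}

parse_ecr_uri "237724776192.dkr.ecr.eu-central-1.amazonaws.com/example/nginx"
```

As an alternative to the console, the repository itself can be created with aws ecr create-repository --repository-name example/nginx --region eu-central-1.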

Now allow the user jenkins to connect to ECR. Go back to the terminal and execute the following steps. You now need the credentials for “PipelineExampleUser” from the downloaded CSV file.

```shell
# change to jenkins user
$ su -s /bin/bash jenkins

# show docker info (optional)
$ docker info

# configure AWS CLI options
$ aws configure
...
AWS Access Key ID [None]: <credentials.csv>
AWS Secret Access Key [None]: <credentials.csv>
Default region name [None]: eu-central-1
Default output format [None]: json
...

# list repositories in registry (optional)
$ aws ecr describe-repositories
```
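Instead of typing the keys at the prompts, you can read them from the CSV file and pass them to aws configure set. The sketch below assumes that the CSV has a header row with the columns “Access key ID” and “Secret access key”; because the layout of the IAM download may differ between console versions, the columns are looked up by name. read_key_pair is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the access key ID and the secret access key
# (one per line) from the credentials.csv downloaded in the IAM console.
# Assumption: the first row is a header containing "Access key ID" and
# "Secret access key"; their column positions may vary.
read_key_pair() {
  awk -F',' '
    NR == 1 {
      # remember the column numbers (index() also tolerates a BOM or CR)
      for (i = 1; i <= NF; i++) {
        if (index($i, "Access key ID")) id = i
        if (index($i, "Secret access key")) secret = i
      }
      next
    }
    NR == 2 {
      if (!id || !secret) exit 1   # header not recognised
      gsub(/\r/, "")               # drop Windows line endings
      print $id
      print $secret
      exit 0
    }' "$1"
}

# usage as user jenkins, instead of answering the "aws configure" prompts:
#   { read -r KEY_ID; read -r SECRET; } < <(read_key_pair credentials.csv)
#   aws configure set aws_access_key_id "$KEY_ID"
#   aws configure set aws_secret_access_key "$SECRET"
#   aws configure set region eu-central-1
#   aws configure set output json
```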

Git Repository

I assume that you are familiar with Git. Now create a Git repository with the following folders and files. I use my own private GitLab repository.

```shell
# show local project tree (optional)
$ tree ~/<path to your project>
|____index.html
|____Dockerfile
|____.gitignore
|____cicd
| |____build.sh
| |____Jenkinsfile
| |____deploy.sh
| |____task_definition.json
| |____test.sh
|____dev_credentials
| |____credentials.csv
|____.git
```
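The skeleton can be created with a few commands. The sketch below is one possible way; the function name create_skeleton is hypothetical, and the no-op script bodies are an assumption, added so that the Deploy and Test stages succeed until deploy.sh and test.sh get real content (build.sh is filled with the content shown further down).

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the project layout shown in the tree above.
create_skeleton() {
  local root="$1" script
  mkdir -p "$root/cicd" "$root/dev_credentials"
  touch "$root/index.html" "$root/Dockerfile" "$root/.gitignore" \
        "$root/cicd/Jenkinsfile" "$root/cicd/task_definition.json"
  for script in build deploy test; do
    # assumption: no-op placeholder until the script gets real content
    if [ ! -e "$root/cicd/$script.sh" ]; then
      printf '#!/usr/bin/env bash\nexit 0\n' > "$root/cicd/$script.sh"
    fi
    chmod +x "$root/cicd/$script.sh"
  done
}

# usage: create_skeleton ~/pipeline-example && cd ~/pipeline-example && git init
```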

Content of the files in the root folder:

index.html:

```html
<!DOCTYPE html>
<html lang="en" dir="ltr">
  <head>
    <meta charset="utf-8">
    <title>DemoPipeline</title>
  </head>
  <body>
    Hello world...
  </body>
</html>
```

Dockerfile:

```dockerfile
FROM nginx:stable-alpine
COPY index.html /usr/share/nginx/html/index.html
```

.gitignore:

```
.DS_Store
dev_credentials/
```
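Since dev_credentials/ holds real access keys, it is worth confirming that Git really ignores it before the first commit. git check-ignore does exactly that; the wrapper is_ignored is a hypothetical convenience around it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if <path> is ignored by the .gitignore rules
# of the repository in <repo>. git check-ignore -q exits 0 for ignored paths.
is_ignored() {
  local repo="$1" path="$2"
  git -C "$repo" check-ignore -q "$path"
}

# usage in the project root, before the first commit:
#   is_ignored . dev_credentials/credentials.csv && echo "credentials stay local"
```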

Content of the files in the cicd folder:

cicd/Jenkinsfile:

```groovy
pipeline {
    agent any

    parameters {
        string(name: 'REPONAME', defaultValue: 'example/nginx', description: 'AWS ECR Repository Name')
        // replace the default value with your <ECR URI>
        string(name: 'ECR', defaultValue: '237724776192.dkr.ecr.eu-central-1.amazonaws.com/example/nginx', description: 'AWS ECR Registry URI')
        string(name: 'REGION', defaultValue: 'eu-central-1', description: 'AWS Region code')
        string(name: 'CLUSTER', defaultValue: 'ExampleCluster', description: 'AWS ECS Cluster name')
        string(name: 'TASK', defaultValue: 'ExampleTask', description: 'AWS ECS Task name')
    }

    stages {
        stage('BuildStage') {
            steps {
                sh "./cicd/build.sh -b ${env.BUILD_ID} -n ${params.REPONAME} -e ${params.ECR} -r ${params.REGION}"
            }
        }
        stage('DeployStage') {
            steps {
                sh "./cicd/deploy.sh"
            }
        }
        stage('TestStage') {
            steps {
                sh "./cicd/test.sh"
            }
        }
    }
}
```

cicd/task_definition.json:

```json
{
  "family": "ExampleTask",
  "containerDefinitions": [
    {
      "image": "URI:NUMBER",
      "name": "ExampleContainer",
      "cpu": 0,
      "memory": 128,
      "essential": true,
      "portMappings": [
        {
          "containerPort": 80,
          "hostPort": 80
        }
      ]
    }
  ]
}
```
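The image value “URI:NUMBER” looks like a placeholder for “<ECR URI>:<build number>”, which matches the tag that build.sh pushes. Filling it in belongs to the deployment covered in the next part; under that assumption, one possible way is a plain sed substitution. render_task_definition is a hypothetical helper, not the author's deploy script.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the "URI:NUMBER" placeholder of the task
# definition template with a concrete image reference and print the result.
# "|" is used as sed delimiter because the image URI contains "/".
render_task_definition() {
  local template="$1" image="$2"
  sed "s|URI:NUMBER|${image}|" "$template"
}

# usage (assumption: in a later deploy step, not part of this tutorial yet):
#   render_task_definition cicd/task_definition.json "$ECR:$BUILD_NUMBER" > task.json
#   aws ecs register-task-definition --cli-input-json file://task.json
```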

Note: Make the shell scripts executable, e.g. with $ chmod +x build.sh deploy.sh test.sh inside the cicd folder; otherwise Jenkins cannot run them.
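Jenkins runs the scripts from its own Git checkout, so the executable bit has to be stored in the repository. On Linux and macOS, git add records it after chmod +x; where Git ignores file modes (core.fileMode=false, e.g. on Windows) you can set it in the index instead. mark_executable_in_git is a hypothetical wrapper around git update-index.

```shell
#!/usr/bin/env bash
# Hypothetical helper: record the executable bit of already staged files in
# the Git index, so that Jenkins gets executable scripts after checkout.
mark_executable_in_git() {
  local repo="$1"
  shift
  git -C "$repo" update-index --chmod=+x -- "$@"
}

# usage in the project root, after "git add cicd":
#   mark_executable_in_git . cicd/build.sh cicd/deploy.sh cicd/test.sh
#   git ls-files -s cicd    # mode 100755 means executable
```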

cicd/build.sh:

```bash
#!/usr/bin/env bash

## shell options
set -e   # exit on the first failing command
set -u   # treat unset variables as errors
set -f   # disable filename globbing

## magic variables (initialised empty so the -z checks below work with set -u)
declare REPONAME=""
declare ECR=""
declare REGION=""
declare BUILD_NUMBER=""
declare -r -i SUCCESS=0
declare -r -i NO_ARGS=85
declare -r -i BAD_ARGS=86
declare -r -i MISSING_ARGS=87

## script functions
function usage() {
  local FILE_NAME
  FILE_NAME=$(basename "$0")
  printf "Usage: %s [options...]\n" "$FILE_NAME"
  printf " -h\tprint help\n"
  printf " -n\tset ecr repository name\n"
  printf " -e\tset ecr repository uri\n"
  printf " -r\tset aws region\n"
  printf " -b\tset build number\n"
}

function no_args() {
  printf "Error: No arguments were passed\n"
  usage
  exit "$NO_ARGS"
}

function bad_args() {
  printf "Error: Wrong arguments supplied\n"
  usage
  exit "$BAD_ARGS"
}

function missing_args() {
  printf "Error: Missing argument for: %s\n" "$1"
  usage
  exit "$MISSING_ARGS"
}

## check script arguments
while getopts "hn:e:r:b:" OPTION; do
  case "$OPTION" in
    h) usage
       exit "$SUCCESS";;
    n) REPONAME="$OPTARG";;
    e) ECR="$OPTARG";;
    r) REGION="$OPTARG";;
    b) BUILD_NUMBER="$OPTARG";;
    *) bad_args;;
  esac
done

if [ "$OPTIND" -eq 1 ]; then
  no_args
fi

if [ -z "$REPONAME" ]; then
  missing_args '-n'
fi

if [ -z "$ECR" ]; then
  missing_args '-e'
fi

if [ -z "$REGION" ]; then
  missing_args '-r'
fi

if [ -z "$BUILD_NUMBER" ]; then
  missing_args '-b'
fi

## run main function
function main() {
  # delete all previous image(s) of this repository (there can be several IDs)
  docker images -q "$REPONAME" | sort -u | xargs -r docker rmi -f

  # build new image
  docker build -t "$REPONAME:$BUILD_NUMBER" --pull=true .

  # tag image for AWS ECR
  docker tag "$REPONAME:$BUILD_NUMBER" "$ECR:$BUILD_NUMBER"

  # log in to ECR ("aws ecr get-login" is deprecated and removed in AWS CLI v2);
  # the registry host is the part of the URI before the first "/"
  aws ecr get-login-password --region "$REGION" \
    | docker login --username AWS --password-stdin "${ECR%%/*}"

  # push image to AWS ECR
  docker push "$ECR:$BUILD_NUMBER"
}

main

# exit
exit "$SUCCESS"
```

Inside the folder “dev_credentials” I store the credentials.csv from AWS. This folder only exists on my local machine, because .gitignore excludes it from Git.

Jenkins job configuration

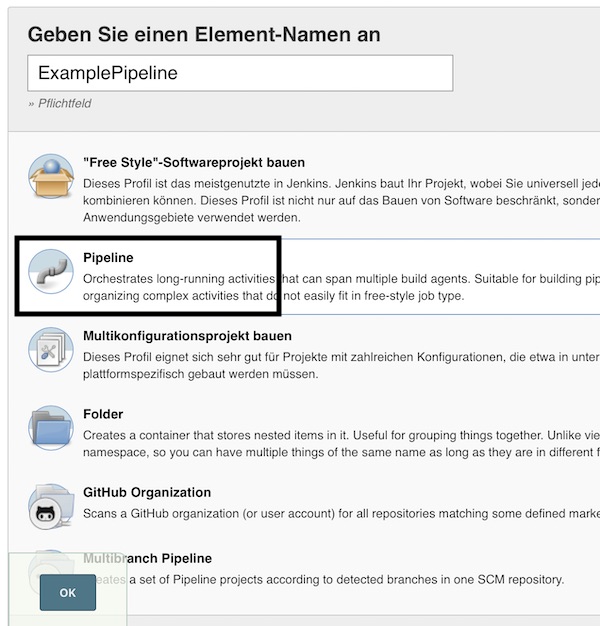

I will not cover Jenkins security topics in this tutorial, so we start directly with the configuration of the job (or project). On the main page, click “New Item” or the link “create new jobs”. Enter the name “ExamplePipeline”, select “Pipeline” and press “OK”.

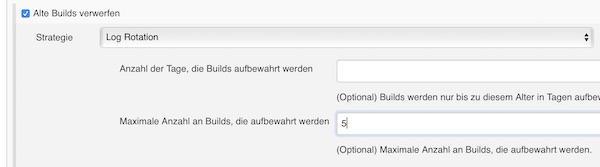

To save some disk space, select the checkbox “Discard old builds” (keeping 5 builds is enough).

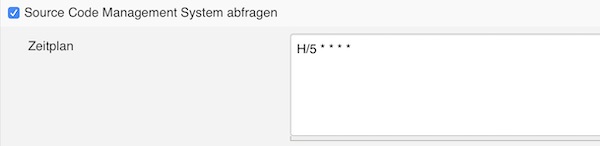

Normally you would create a webhook to trigger the build after each commit, but our EC2 instance changes its public IP/DNS on every stop/start. That’s why we let Jenkins poll Git for new revisions every 5 minutes (“Poll SCM”, e.g. with the schedule “H/5 * * * *”) and trigger the job if something has changed.

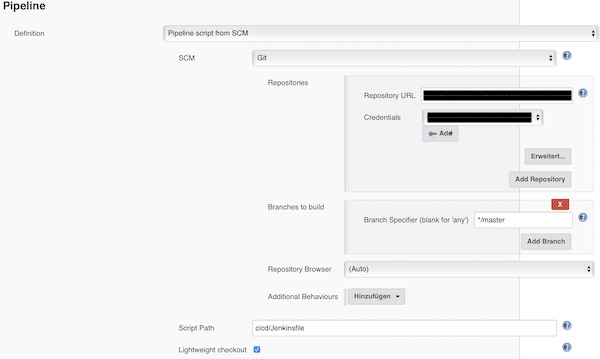

Add the repository (credentials may be needed), then configure the branch and the Jenkinsfile path (cicd/Jenkinsfile).

Press “Save”, _cross your fingers_ and trigger the build manually. If everything is set up correctly, the job runs without issues and ECR contains your images (one tag per build you triggered).
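To check the result from the terminal instead of the ECR console, you can list the image tags. Since build.sh tags every image with the Jenkins build number, the highest numeric tag belongs to the most recent push. The sketch assumes a configured AWS CLI with ECR read access (the PipelineExampleUser should do); latest_build_tag and the AWS_CLI stub override are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the highest numeric image tag of an ECR
# repository, i.e. the latest build number that build.sh pushed.
# AWS_CLI defaults to "aws" and can point to a stub for offline testing.
latest_build_tag() {
  "${AWS_CLI:-aws}" ecr list-images \
    --repository-name "$1" \
    --region "${REGION:-eu-central-1}" \
    --query 'imageIds[].imageTag' \
    --output text |
    tr '\t' '\n' |        # text output separates the tags with tabs
    grep -E '^[0-9]+$' |  # keep purely numeric tags (build numbers)
    sort -n |
    tail -n 1
}

# usage: latest_build_tag example/nginx
```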

The next part of this tutorial series will be about deployment to ECS.